Agentic AI frameworks transform LLMs from conversational tools into autonomous workers that plan, execute, and adapt across complex workflows. The options splits between open-source powerhouses (LangGraph, CrewAI, AutoGen) that offer flexibility and customization, and closed platforms (Amazon Bedrock, Azure AI) that provide enterprise-grade infrastructure. Choose based on three factors: your team's technical depth, need for control versus speed, and long-term vendor strategy. Most enterprises will adopt a hybrid approach—platforms for quick wins, frameworks for competitive differentiation.

Abstract

The advancements from prompt-based generative AI to autonomous agentic systems represent 2025's most significant enterprise AI transformation. While ChatGPT introduced conversational AI to millions, agentic frameworks like LangGraph, AutoGen, LlamaIndex, CrewAI, Amazon Bedrock, and emerging tools like Semantic Kernel, Agno, TaskWeaver, and Haystack enable AI to orchestrate multi-step workflows, make decisions, and coordinate specialized agents without constant human intervention.

I'm Dr. Hernani Costa, founder of First AI Movers, where I help executives navigate AI transformation through my newsletter, which reaches 5,000+ professionals, and consulting work with dozens of companies. Through my research on agentic AI systems and hands-on implementation experience, I've seen firsthand how organizations achieve 20-50% productivity gains when they match the right framework to their specific needs.

This guide cuts through the hype to answer: What are these frameworks? Why should you care now? When do you use one versus another? What are the real advantages, disadvantages, and lock-in risks? And critically, where do you start when open-source flexibility battles closed-source convenience? You'll learn practical decision frameworks, see real implementation patterns, and discover which approach aligns with your organization's maturity, resources, and strategic goals.

What Are Agentic AI Frameworks?

From Chatbots to Autonomous Workers

Agentic AI frameworks provide the architectural foundation for building autonomous AI systems that can perceive their environment, reason about goals, plan multi-step actions, use tools, and learn from feedback. Unlike traditional AI that responds reactively to prompts, agentic systems proactively orchestrate processes—managing complex tasks, making real-time decisions, and coordinating with other agents.

Think of the difference this way: A chatbot answers questions. An agent schedules your meetings, researches competitors, generates reports, updates your CRM, and follows up with stakeholders—all from a single instruction.

These frameworks handle the hard problems: state management across conversations, tool orchestration, memory systems, error recovery, and agent coordination. They transform isolated LLM API calls into production-ready systems that actually get work done.

The Core Components Every Framework Provides

Modern agentic frameworks share common architectural elements, though implementations vary:

Orchestration Layer coordinates how agents break down complex tasks into manageable steps, deciding which agent or tool handles each piece. LangGraph uses graph-based workflows with cycles and branches. CrewAI employs hierarchical task delegation with defined roles. AutoGen enables conversational multi-agent interactions.

Memory Systems give agents context retention across interactions. Short-term memory maintains the conversation state. Long-term memory stores learned patterns, user preferences, and domain knowledge. Amazon Bedrock AgentCore provides sophisticated memory infrastructure designed for production scale.

Tool Integration allows agents to interact with external systems—APIs, databases, file systems, and web browsers. Semantic Kernel excels at plugin ecosystems with native code functions and OpenAPI specs. LlamaIndex specializes in connecting agents to enterprise data sources.

Planning and Reasoning capabilities enable agents to decompose goals, evaluate options, and adapt strategies. Some frameworks use chain-of-thought prompting, others employ reinforcement learning or Monte Carlo tree search methods.

Safety and Guardrails ensure agents operate within defined boundaries—crucial for enterprise deployment. Amazon Bedrock provides built-in guardrails for security and compliance.

Three Framework Categories You Need to Understand

The agentic AI landscape divides into three distinct camps, each serving different needs:

Open-Source Frameworks (LangGraph, AutoGen, CrewAI, LlamaIndex, LangChain, Haystack) provide maximum flexibility and vendor neutrality. You control the code, choose your LLM provider, run on-premises or in the cloud, and customize every component. The tradeoff: higher technical complexity and more DevOps responsibility.

Closed/Proprietary Platforms (Amazon Bedrock, Google ADK, Azure AI) offer managed infrastructure with enterprise support. You get faster deployment, built-in observability, automatic scaling, and compliance certifications. The cost: vendor lock-in and less architectural control.

Hybrid Enterprise Solutions combine both approaches. Microsoft's convergence of Semantic Kernel and AutoGen exemplifies this trend—open frameworks backed by enterprise support. Organizations increasingly use platforms for quick wins while building competitive differentiation through custom frameworks.

Currently, I'm seeing forward-thinking teams adopt open frameworks for strategic capabilities while leveraging platforms for commodity functions—getting best-of-both-worlds flexibility without reinventing infrastructure.

Why Should You Care About Agentic AI Right Now?

The Productivity Revolution Is Already Here

Organizations implementing agentic workflows report productivity gains of 30-45% across content creation, customer service, and knowledge work. This doesn't mean chatbots answering FAQs. It's autonomous systems that handle end-to-end processes previously requiring human judgment at every step.

A marketing agency I consulted with deployed GPT-5's agentic capabilities to automate client content workflows—learning brand voices, scheduling social media, and adapting messaging based on engagement data. Result: 50% faster campaign deployment while maintaining quality. Their competitive advantage expanded from execution speed to strategic positioning.

Meanwhile, executives now consider AI a strategic priority, with the majority believing AI opens new business opportunities. The gap between early adopters and laggards widens daily. Companies deploying agents today build institutional knowledge and workflow patterns that compound, creating moats competitors can't easily replicate.

From Proof-of-Concept to Production Systems

The maturity is visible, it’s happening right now, and it changes everything. Early 2024 saw experimental agent demos. Late 2025 brings production-ready infrastructure with enterprise support.

Amazon Bedrock AgentCore launched in general availability in October 2025, providing the industry's longest runtime (8 hours), framework-agnostic support, and complete session isolation. Microsoft unified Semantic Kernel and AutoGen, creating enterprise-ready multi-agent solutions with stable APIs and production support.

These moves signal that agentic AI crossed the chasm from innovation theater to operational systems. The question shifted from "Will agents work?" to "Which frameworks fit our stack?"

The Competitive Timing Advantage

First-mover advantage matters more here than in typical enterprise software. Why? Because agentic systems improve with use—they learn your workflows, accumulate domain knowledge, and refine decision-making patterns.

Organizations deploying agents in 2025 and early 2026 aren't just automating tasks. They're building proprietary datasets of successful agent interactions, developing institutional expertise in prompt engineering and workflow design, and establishing cultural practices around human-AI collaboration.

By the time competitors implement their first agent, early adopters are on their third iteration with compound learning effects. That's the real competitive moat—not the technology itself, but the organizational capability to deploy it effectively.

From my consulting work, I've seen that companies that wait for "mature" solutions miss the learning curve. The frameworks available today are production-ready for strategic deployment, and the experience gained now determines who leads and who follows in the agent economy.

The Framework Breakdown: What Each One Does Best

LangGraph: Maximum Control for Complex Workflows

LangGraph treats agent interactions as directed graphs where nodes represent states and edges define transitions. This graph-based approach provides exceptional flexibility for complex decision-making pipelines with conditional logic, branching workflows, and dynamic adaptation.

Core Strengths: Stateful orchestration maintains conversation context across multiple interactions. Cyclic graphs allow agents to revisit previous steps and adapt to changing conditions. Time-travel debugging lets developers inspect and modify state at any point in execution. The framework integrates seamlessly with LangChain's extensive tool ecosystem.

When To Use LangGraph: Choose this when you need fine-grained control over agent behavior, complex workflows with multiple decision points, or sophisticated state management. It excels at adaptive customer support systems, multi-step research workflows, and applications that require audit trails for compliance.

Real Implementation: A financial services company used LangGraph to build a loan processing agent that navigates conditional approval workflows based on credit scores, income verification, and regulatory requirements. The graph structure naturally modeled their business logic while providing visibility into decision paths.

The Tradeoff: Steeper learning curve than simpler frameworks. You need to understand graph theory concepts and invest time in architectural design. Best suited for teams with strong engineering capabilities and complex use cases that justify the investment.

AutoGen: Research-Driven Multi-Agent Collaboration

Microsoft's AutoGen pioneered conversational multi-agent systems in which agents communicate asynchronously to solve complex tasks. Born from Microsoft Research, it emphasizes flexibility, human-in-the-loop workflows, and advanced orchestration patterns.

Core Strengths: Event-driven architecture supports sophisticated agent interactions. Customizable agents seamlessly integrate LLMs, tools, and human oversight. The framework supports both autonomous operation and supervised workflows, making it ideal for scenarios that require human judgment.

When To Use AutoGen: Use AutoGen for research projects, prototyping new agent patterns, or workflows combining AI autonomy with human expertise. It shines in collaborative scenarios like multi-agent brainstorming, complex data analysis requiring verification, and academic environments exploring novel agent architectures.

Microsoft's Strategic Shift: In late 2024, Microsoft announced that AutoGen would converge with Semantic Kernel, creating a unified multi-agent runtime that combines AutoGen's cutting-edge patterns with Semantic Kernel's enterprise stability. This positions AutoGen for experimentation while providing a migration path to production-ready systems.

The Tradeoff: Relatively new with an evolving ecosystem. Best for teams comfortable with experimental technology and willing to invest in custom integration work. The research-first orientation means less polish than enterprise platforms but more innovation velocity.

LlamaIndex: Data-Centric Agents for Enterprise Knowledge

LlamaIndex specializes in connecting LLMs with your enterprise data through advanced indexing and retrieval techniques. Originally focused on retrieval-augmented generation (RAG), it evolved into a comprehensive framework for building knowledge assistants.

Core Strengths: Versatile data ingestion from 100+ built-in loaders covering PDFs, databases, APIs, and cloud applications. Multiple index types (vector, tree, keyword, composite) optimize for different query patterns. The framework excels at transforming raw data into queryable knowledge bases that agents can leverage.

When To Use LlamaIndex: Choose this for building AI assistants that need deep integration with proprietary data, document search and analysis systems, or customer support agents accessing knowledge bases. It's robust for organizations with complex data landscapes requiring sophisticated retrieval.

Security and Scalability: LlamaIndex enables local deployment of models and indexes while maintaining strict control over sensitive data. The llama-agents microservices architecture supports distributed multi-agent systems that scale with business demands.

The Tradeoff: Some indexing methods incur high LLM costs unless properly optimized. Initial setup can be complex for non-technical users. Works best when RAG and data retrieval are central to your use case rather than when it's a general-purpose automation.

CrewAI: Role-Based Simplicity for Team Workflows

CrewAI provides an intuitive, lightweight framework for building multi-agent systems organized like human teams with defined roles and responsibilities. This role-based architecture makes agent coordination feel natural and accessible.

Core Strengths: User-friendly setup with minimal code required to deploy collaborative agents. Sequential and hierarchical execution modes support a range of workflow patterns. The framework emphasizes team-like collaboration where agents have specific expertise and communicate to solve shared goals.

When To Use CrewAI: Select CrewAI when your workflow maps naturally onto roles and responsibilities—researcher, writer, editor, reviewer. It's ideal for content creation pipelines, customer service teams where agents specialize by domain, and business processes with clear task delegation.

Performance Considerations: CrewAI optimizes for speed, executing faster than many alternatives.

The Tradeoff: Less flexibility than graph-based frameworks for non-role-based applications. No native streaming support in some configurations. Best when your use case aligns with its team-oriented model rather than forcing complex logic into role structures.

Amazon Bedrock: Enterprise-Grade Managed Agents

Amazon Bedrock provides fully managed infrastructure for building, deploying, and operating AI agents at scale. The AgentCore platform offers framework-agnostic support, meaning you can use any agent framework while leveraging AWS's enterprise infrastructure.

Core Strengths: Multi-agent collaboration with supervisor agents coordinating specialized teams. The longest runtime in the industry (8 hours) supports complex asynchronous workflows. Complete session isolation ensures security for multi-tenant applications. Built-in memory, guardrails, code interpretation, and retrieval-augmented generation come standard.

When To Use Amazon Bedrock: Choose this for AWS-native enterprises requiring managed infrastructure, organizations with limited AI engineering resources, or teams prioritizing speed-to-production over architectural control. It excels when compliance, security, and observability are critical requirements.

Multi-Agent Innovation: The March 2025 general availability introduced inline agents (dynamic role adjustment at runtime), payload referencing (reduced data transfer costs), and CloudFormation support for reusable agent templates. These features address real production pain points I've seen in consulting engagements.

The Tradeoff: Vendor lock-in to AWS ecosystem. Less customization than open frameworks. Costs can escalate with heavy usage. Best for teams already invested in AWS who value managed services over maximum flexibility.

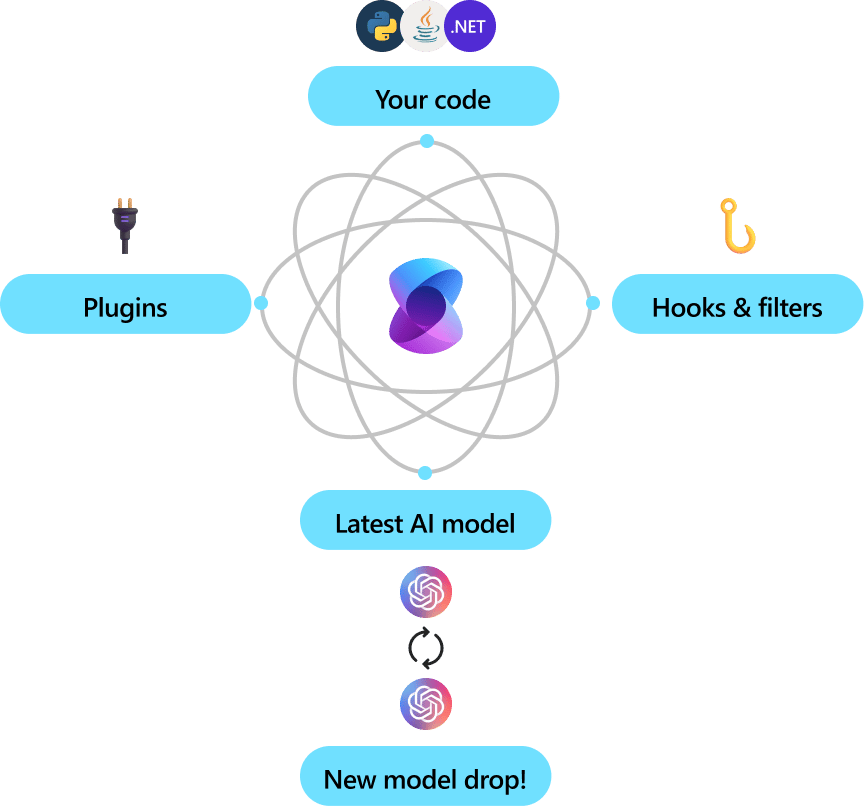

Semantic Kernel: Microsoft's Enterprise Integration Layer

Semantic Kernel is Microsoft's model-agnostic SDK empowering developers to build, orchestrate, and deploy AI agents across .NET, Python, and Java. It emphasizes enterprise readiness with stable APIs, extensive plugin support, and deep Azure integration.

Core Strengths: Flexibility across multiple programming languages and LLM providers. Plugin ecosystem integrates native code, prompt templates, OpenAPI specs, and the Model Context Protocol. Process Framework supports stateful, long-running business processes with human-in-the-loop capabilities.

When To Use Semantic Kernel: Select this for Microsoft-centric enterprises, .NET development teams, or organizations that require multilingual support. It fits scenarios needing tight integration with Azure services, Office 365, or Dynamics.

Microsoft's Agentic Vision: Semantic Kernel reached version 1.0 across all languages, signaling production readiness with non-breaking changes. Microsoft positions it as the stable foundation while AutoGen provides cutting-edge experimentation. The convergence strategy gives enterprises a supported path from innovation to scale.

The Tradeoff: Primarily benefits Microsoft ecosystem adopters. Teams outside .NET/Azure may find better options. The enterprise focus means slower innovation velocity compared to research-driven frameworks.

Emerging Frameworks Worth Watching

Agno (formerly Phidata) emphasizes speed and simplicity with a Pythonic interface for building agents with memory, knowledge, and tools. Benchmarks show Agno agents instantiate 529× faster than LangGraph with 24× lower memory usage. Best for performance-critical applications and teams prioritizing minimal abstractions.

TaskWeaver takes a code-first approach where agents translate user requests into executable Python code. Developed by Microsoft for data analytics, it treats plugins as callable functions and supports rich data structures such as DataFrames. Ideal for data science teams and business intelligence workflows.

Haystack provides a simpler alternative to LangChain with modular components for building search and retrieval pipelines. The Agent implementation integrates tools to enable autonomous task execution beyond static Q&A. Choose Haystack for document search systems, RAG applications, or teams finding LangChain overly complex.

When To Use One Framework Versus Another: Decision Framework

Match Framework to Team Maturity and Resources

Your organization's technical capabilities should drive framework selection more than feature checklists. I've seen teams choose powerful frameworks that languish unused because they lacked the expertise to deploy them effectively.

High Technical Maturity (experienced AI/ML engineers, DevOps infrastructure): Consider LangGraph for maximum control, AutoGen for research-driven innovation, or LlamaIndex for sophisticated retrieval when data integration complexity demands it. These teams benefit from flexibility and can handle steeper learning curves.

Medium Technical Maturity (solid engineering but limited AI expertise): CrewAI offers accessible multi-agent coordination with lower complexity. Semantic Kernel provides enterprise support for Microsoft shops. Haystack simplifies common patterns. These frameworks balance capability with approachability.

Lower Technical Maturity (business-focused teams, limited development resources): Amazon Bedrock, Google ADK, or other managed platforms eliminate infrastructure overhead. You trade architectural control for faster deployment and vendor support. This approach makes sense when AI augments core business rather than defining competitive differentiation.

From my consulting experience, teams often overestimate their ability to manage open frameworks. Honestly assess whether you have dedicated AI engineering resources before committing to complex architectures. Starting with managed platforms and graduating to frameworks as expertise grows often succeeds better than the reverse.

Workflow Complexity Determines Architecture Needs

The nature of your workflows should guide architectural choices beyond team capabilities.

Simple Linear Workflows (straightforward task sequences): CrewAI's role-based model or basic LangChain pipelines suffice. Overengineering with graph-based frameworks adds complexity without benefit.

Branching Logic and Conditional Flows (decision trees, context-dependent paths): LangGraph excels with its graph-based orchestration supporting cycles, branches, and state management. The visual representation naturally maps to complex business logic.

Multi-Agent Collaboration (specialized agents coordinating on shared goals): AutoGen's conversational architecture or CrewAI's team model fit well. Amazon Bedrock's supervisor agents provide a managed alternative for AWS users.

Data-Intensive Retrieval (heavy document search, knowledge base queries): LlamaIndex optimizes specifically for this with advanced indexing strategies and data connectors. Haystack provides a simpler option for standard RAG patterns.

Code Generation and Analytics (programmatic task execution): TaskWeaver's code-first approach, where agents write and execute Python, aligns perfectly.

I currently recommend mapping your top three use cases to framework strengths before broad adoption. Build proofs of concept with different frameworks on representative workflows—real performance often surprises.

Control Versus Convenience: The Open-Closed Spectrum

This fundamental tradeoff shapes every framework decision: Do you need maximum flexibility or faster time-to-value?

Choose Open-Source Frameworks When:

Vendor neutrality matters for strategic reasons (avoiding lock-in, regulatory requirements)

You need fine-grained control over agent behavior and architecture

Custom integration with proprietary systems is critical

You want to run agents on-premise or choose any LLM provider

Building AI capabilities as core competitive differentiation

Choose Closed Platforms When:

Speed-to-production outweighs architectural flexibility

Limited AI engineering resources constrain what you can build

Enterprise support, compliance certifications, and SLAs are required

You're already invested in a cloud ecosystem (AWS, Azure, Google)

AI augments core business rather than defining it

Hybrid Approaches increasingly make sense: Use platforms for commodity functions (customer support, content moderation) while building competitive moats with custom frameworks for strategic capabilities (proprietary workflows, unique data integration).

One healthcare client I advised uses Amazon Bedrock for HIPAA-compliant patient communication agents while developing LangGraph-based clinical decision support with their proprietary medical knowledge base. The hybrid strategy balances speed, compliance, and differentiation.

Advantages, Disadvantages, and Lock-In Risks

Open-Source Framework Advantages

Vendor Neutrality gives you freedom to switch LLM providers as technology evolves. When OpenAI pricing changes or a superior model emerges, open frameworks let you adapt without rewriting your architecture. This matters more as AI capabilities shift rapidly.

Customization and Control enable optimization for your specific use cases. You can modify components, add custom tools, integrate proprietary systems, and fine-tune every aspect of agent behavior. Organizations building AI as a competitive advantage need this flexibility.

Transparency and Auditability matter for regulated industries. Open-source code can be inspected, validated, and certified for compliance requirements that black-box platforms can't meet. Financial services and healthcare particularly value this.

Cost Flexibility allows you to optimize infrastructure spending. Run smaller models for simple tasks, use open-source LLMs where appropriate, and deploy on-premise to avoid cloud costs.

Community Innovation accelerates capability development. LangChain, LangGraph, and AutoGen benefit from thousands of contributors who add integrations, fix bugs, and share patterns. The innovation velocity often exceeds proprietary platforms.

Open-Source Framework Disadvantages

Higher Technical Complexity requires stronger engineering teams. You manage more infrastructure, handle integration challenges, and debug issues without vendor support. Teams underestimate this operational burden, leading to stalled implementations I've had to rescue.

Steeper Learning Curves slow initial deployment. LangGraph demands graph theory understanding. AutoGen requires grasping event-driven architectures. The expertise investment pays off for complex use cases but creates friction for simple ones.

DevOps Responsibility means you handle deployment, scaling, monitoring, and security. While platforms provide built-in observability and managed infrastructure, open frameworks require you to build or integrate these capabilities. Smaller teams struggle with this operational overhead.

Documentation Lag occurs when rapid development outpaces written guides. Fast-evolving frameworks like AutoGen sometimes leave developers navigating community forums for answers. This improves over time but creates friction for early adopters.

Integration Effort varies widely across tools and data sources. While popular frameworks include many connectors, integrating with proprietary systems often requires custom development. Platforms typically offer pre-built integrations to common enterprise software.

Closed Platform Advantages

Faster Time-to-Production accelerates deployment from months to weeks. Amazon Bedrock agents can be configured in "just a few quick steps" according to AWS. For organizations prioritizing speed over customization, this matters.

Enterprise Support and SLAs provide safety nets for production systems. When agents break at 2 AM, having vendor support with guaranteed response times reduces risk. Open frameworks rely on community support and your internal expertise.

Built-in Compliance and Security address regulated industry requirements. Amazon Bedrock includes guardrails, audit trails, and session isolation designed for enterprise security. Building equivalent safeguards with open frameworks takes significant effort.

Managed Infrastructure eliminates DevOps complexity. Automatic scaling, monitoring, versioning, and deployment come standard. Teams focus on business logic rather than operational concerns.

Seamless Ecosystem Integration benefits cloud-native organizations. If you're already on AWS, Bedrock integrates naturally with existing services. Microsoft shops find Semantic Kernel and Azure AI work together smoothly.

Closed Platform Disadvantages

Vendor Lock-In creates strategic risk. Migrating agents built on Amazon Bedrock to another platform requires substantial reengineering. You're dependent on vendor pricing, feature roadmaps, and business continuity.

Limited Customization constrains how deeply you can optimize. Platforms offer configuration options but don't expose the underlying architecture for modification. This ceiling matters less for commodity use cases but limits competitive differentiation.

Higher Long-Term Costs can accumulate with usage-based pricing. While platforms reduce upfront engineering investment, per-request fees scale with success. Organizations processing millions of agent interactions may find open frameworks more economical.

Slower Innovation Cycles mean you wait for vendor feature releases. Open frameworks benefit from rapid community innovation, while platforms prioritize stability over cutting-edge capabilities. This trade-off favors stability for some, but frustrates innovators.

Less Transparency obscures how agents make decisions. Black-box models make debugging harder and create compliance challenges in regulated industries requiring algorithmic explainability.

Lock-In Mitigation Strategies

Organizations should actively plan for vendor independence even when choosing platforms. Here's how:

Abstraction Layers isolate business logic from platform-specific code. Write agents against standardized interfaces that could theoretically swap underlying frameworks. This adds complexity but preserves optionality.

Hybrid Architectures diversify risk by using multiple platforms for different functions. Don't put all agents on one vendor—this maintains negotiating leverage and provides fallback options.

Data Portability ensures you own and control training data, conversation history, and learned behaviors. Platforms that trap your data deliberately increase switching costs.

Open Standards Adoption like the Model Context Protocol (MCP) enables interoperability across frameworks and platforms. MCP-compatible agents can switch between different systems more easily.

From my consulting work, I've found that teams that evaluate lock-in risk upfront make better strategic choices. The cheapest option today often becomes expensive when you're trapped in an ecosystem years later.

Where To Start: A Practical Implementation Roadmap

Phase 1: Assessment and Strategy (Weeks 1-4)

Inventory Current State: Document your existing AI initiatives, technical capabilities, and infrastructure. What LLMs are you using? What cloud platforms? What internal expertise exists? I see teams skip this step and choose frameworks misaligned with their environment.

Define Use Cases: Prioritize 2-3 high-impact workflows for initial agent deployment. Look for tasks that are repetitive, require multi-step reasoning, and currently consume significant human time. Content creation, customer support, and data analysis are typically quick-win areas.

Assess Team Capabilities: Honestly evaluate technical maturity using the earlier framework: high, medium, or low AI/ML expertise. This determines whether you start with open frameworks or managed platforms. Don't let ambition exceed capability—better to succeed with simpler tools than fail with complex ones.

Set Success Criteria: Define measurable outcomes before building anything. What productivity gain justifies the investment? How will you measure agent performance? When do you expand versus pivot? Vague goals lead to abandoned pilots.

Phase 2: Proof-of-Concept (Weeks 5-12)

Select Framework for Testing: Based on your assessment, choose 1-2 frameworks aligned with team capabilities and use cases:

For high technical maturity + complex workflows: LangGraph or AutoGen

For medium maturity + role-based tasks: CrewAI or Semantic Kernel

For low maturity or AWS-native: Amazon Bedrock

For data-intensive retrieval: LlamaIndex or Haystack

Build Narrow but Deep: Focus on one workflow end-to-end rather than surface-level exploration. A working agent that saves 10 hours weekly beats three abandoned demos. This validates both technical feasibility and business value.

Involve Domain Experts: Include the humans currently performing design and testing tasks. Their knowledge of edge cases, quality requirements, and workflow nuances is irreplaceable. Agents augment these experts, not replace them initially.

Measure Everything: Instrument your POC to track task completion rates, time savings, error rates, and user satisfaction. Quantitative data drives expansion decisions and justifies investment.

Phase 3: Production Deployment (Weeks 13-26)

Harden for Reliability: POC code rarely survives production unchanged. Add error handling, retry logic, fallback mechanisms, and monitoring. Amazon Bedrock's built-in observability features help here if using managed platforms.

Implement Guardrails: Define boundaries for agent autonomy—what decisions require human approval, what data agents can access, what actions are prohibited. Start conservative and expand trust as agents prove reliable.

Build Human-in-the-Loop Workflows: Most successful agent deployments keep humans involved for oversight, exception handling, and continuous improvement. AutoGen and Semantic Kernel particularly support these patterns.

Deploy Gradually: Roll out to a limited set of users before the full organization. Monitor closely, gather feedback, iterate quickly. Scaling too fast before validating production behavior creates problems that erode trust.

Establish Governance: Create clear ownership for agent management, security reviews, performance monitoring, and incident response. Agents need operational discipline, as with any production system.

Phase 4: Scale and Optimize (Weeks 27+)

Expand Use Cases: Apply proven frameworks to additional workflows. Learning from the initial deployment substantially accelerates subsequent projects.

Optimize Costs: As usage grows, evaluate LLM selection, caching strategies, and infrastructure choices. The flexibility of open frameworks helps here—switch models for cost-sensitive tasks.

Build Internal Expertise: Invest in training, create internal documentation, and develop reusable patterns. Organizations that build agentic AI capabilities as core competencies gain compounding advantages.

Monitor Competitive Landscape: Agentic AI evolves rapidly. Stay informed about new frameworks, emerging capabilities, and industry best practices. What works in 2025 will evolve by 2026.

Prepare for Multi-Agent Future: Current single-agent deployments pave the way for coordinated multi-agent systems. Design with this evolution in mind—modular architectures make expansion easier.

Bringing It All Together: Your Next Steps

Agentic AI represents the most significant shift in enterprise AI since the introduction of LLMs. The frameworks explored here—LangGraph, AutoGen, LlamaIndex, CrewAI, Amazon Bedrock, Semantic Kernel, Agno, TaskWeaver, and Haystack—each solve different problems for different organizational contexts.

The strategic choice isn't which framework is "best" in absolute terms, but which aligns with your team's capabilities, workflow complexity, and long-term vendor strategy. Organizations with strong AI engineering teams and complex requirements benefit from the flexibility and control that open frameworks offer. Teams prioritizing speed and lacking deep technical resources find value in the convenience of managed platforms. Most enterprises will ultimately adopt hybrid approaches—platforms for commodity functions, frameworks for competitive differentiation.

Three principles guide successful agentic AI adoption from my consulting work:

Start narrow and deep with one high-impact use case rather than broad experimentation. Working agents that deliver measurable value build organizational momentum and justify expanded investment.

Match ambition to capability—choose frameworks your team can actually deploy and maintain. The most powerful technology creates no value if it sits unused because nobody understands how to implement it.

Build for learning and iteration—the first framework you choose likely won't be the last. Design modular architectures that allow framework evolution as your expertise and requirements grow.

The window for competitive advantage through agentic AI is open now. Organizations deploying agents in 2025 aren't just automating tasks—they're building proprietary capabilities in workflow design, agent orchestration, and human-AI collaboration that compound over time. This institutional knowledge becomes the real moat, not the technology itself.

Want to stay ahead of AI trends that actually matter to your business? Join more than 5,000 executives reading First AI Movers Daily Newsletter. Every day, I break down the AI developments that will actually impact your industry—no fluff, just actionable insights. You can also subscribe to First AI Movers Insights for deeper strategic analysis.

About me: My name is Hernani Costa, I'm an AI strategist, fractional CxO, and founder of First AI Movers. I help executives and founders navigate AI transformation without losing their humanity. With a PhD in Computational Linguistics and over 25 years of experience spanning academic research, startup leadership, and AI Senior Consultancy, I've guided dozens of organizations through practical AI implementation while maintaining ethical standards. These days, I'm laser-focused on helping leaders become truly AI-first. Happy to connect with you on LinkedIn. If you're looking for strategic partnerships, please get in touch with me at: info at First AI Movers dot com. And, subscribe to my daily newsletter to receive free daily updates.

Looking for more great writing in your inbox? 👉 Discover the newsletters busy professionals love to read.

For services or sponsorships, email me at info at firstaimovers dot com; or message me on LinkedIn.