In this article, you will find a complete guide to Perplexity Labs — the AI platform turning ideas into apps, dashboards & reports. Tips, examples & best practices for 2025.

1) Overview of Perplexity Lab and Its Capabilities

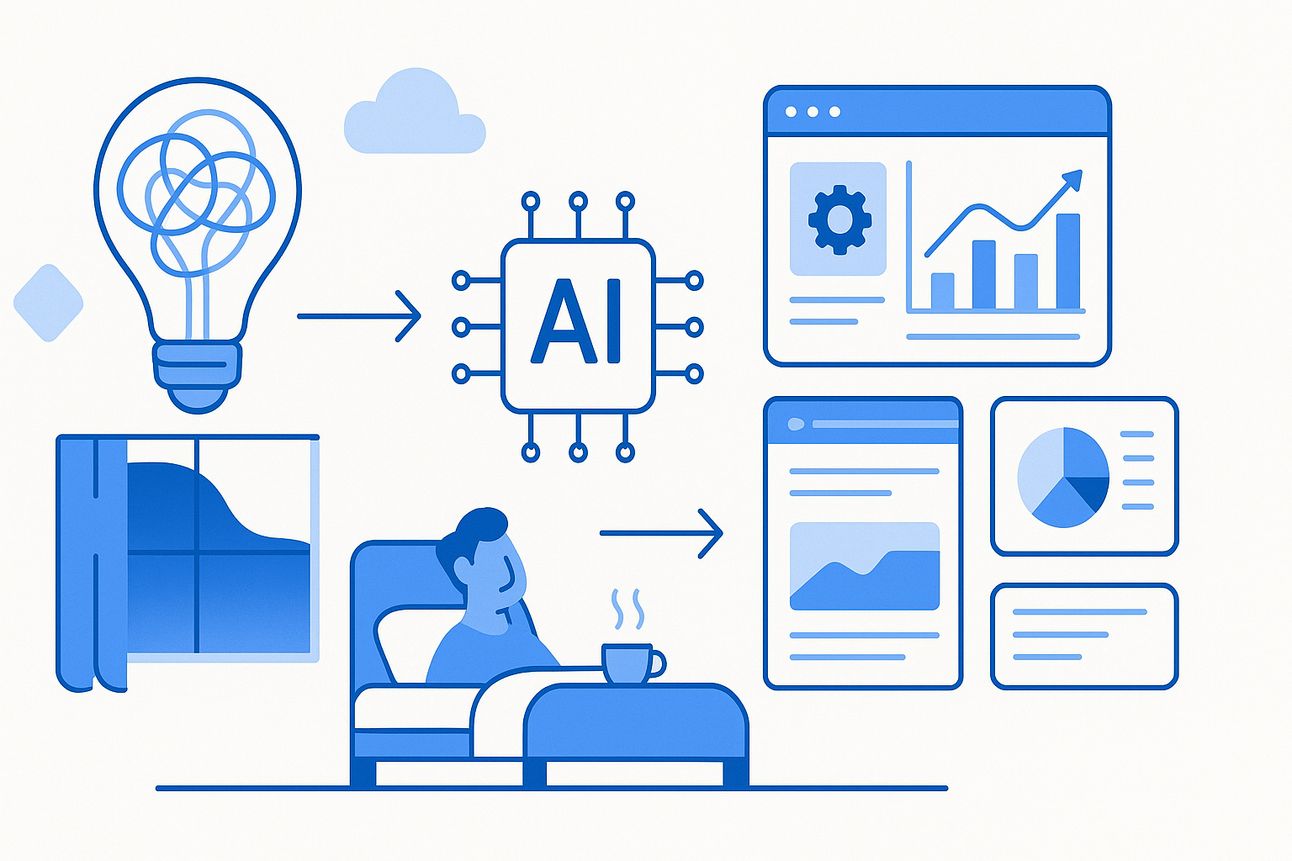

Perplexity Labs (often referred to as Perplexity Lab) is a recently launched feature (May 29, 2025) of the Perplexity AI platform that serves as an AI-driven project development environment. Unlike Perplexity’s standard Search (quick Q&A) and Research (in-depth analysis) modes, Labs is designed to handle complex, multi-step tasks and produce “finished” outputs such as reports, data analyses, code, and even simple web applications. In practice, Perplexity Labs acts like an AI co-developer or “copilot,” capable of taking a high-level prompt and autonomously performing a sequence of actions (web searches, code execution, data visualization, etc.) to generate a comprehensive result.

Perplexity Labs is available to Pro subscribers (at ~$20/month) and comes with a quota of 50 Labs queries per month. Users access it through a mode selector (on web or mobile), then enter a natural-language prompt describing the project or task they want completed. The platform will then orchestrate a workflow: for example, it might research information with live web browsing, write and run code to process data, generate charts or images, and compile everything into a final output. All intermediate outputs (code files, images, CSV data, etc.) are collected in an “Assets” tab for the user to review or download. In many cases, Labs can also present an interactive result in an “App” tab, allowing the user to interact with a generated web app or dashboard directly within Perplexity.

Key capabilities of Perplexity Labs include:

End-to-end Project Generation: It can produce reports, analytical write-ups, spreadsheets, visualizations, slide decks, dashboards, and even working web applications from a single prompt. The system leverages advanced tools (e.g., headless web browsing to gather data, a sandboxed runtime to execute code, charting and image generation libraries) to handle tasks that would normally require multiple software tools or human experts. For example, Labs is capable of writing Python or JavaScript code to manipulate data, executing it, and then embedding the results (such as graphs or computed tables) into the final output.

Multi-Model and Real-Time Data: Perplexity Labs utilizes large language models (LLMs) to drive its reasoning. Pro users can choose from multiple model backends (OpenAI’s GPT-4 “Omni”, Anthropic’s Claude 3.5 variants, etc.) depending on the task. Notably, it provides cited, up-to-date information via web search integration, meaning answers and reports are grounded in real-time data with source citations, which is particularly useful for research-oriented projects. This hybrid of web search and LLM capabilities distinguishes Labs from a standard coding assistant — it’s not only generating code or text, but also pulling in live information as needed.

Time-Extended Workflows: Labs is designed to invest more time per query than the regular Q&A mode. A single Labs session often involves 10+ minutes of AI “thinking” time (and can run up to 30+ minutes for very complex projects) in order to gather information and iteratively build the output. The user can monitor progress step-by-step (the interface may show a “Tasks” or “Steps” view detailing what the AI is doing) and intervene if necessary — e.g., skipping a step or adding an instruction if the AI is going off track. This ensures the user retains some control: Labs is an interactive workflow, not just a one-shot answer generator.

In summary, Perplexity Labs represents a shift toward an “AI project assistant” model: it merges search, coding, and content creation into one interface. This enables turning a high-level idea (like “Analyze my business data and build a dashboard”) into a tangible result with minimal manual effort. Early descriptions from the company pitch it as “having an entire team at your disposal” for complex tasks. By consolidating these capabilities in one tool, Perplexity Labs aims to streamline workflows that developers or analysts would otherwise carry out across many different applications (from Excel to IDEs to browsers).

(Release timeline: Perplexity Labs was announced and launched on May 29, 2025. As of June 2025, it is a very new feature, less than two months old, and is rapidly evolving with feedback from its initial user base.)

2) Examples of Applications Developed Using Perplexity Lab

Developers and early adopters have been quick to experiment with Perplexity Labs, building a variety of projects that showcase its capabilities. The official Project Gallery on Perplexity’s website highlights sample applications across domains (education, finance, research, creative, etc.), many of which were generated entirely by Labs from user prompts. Below are a few notable examples of what has been created with Perplexity Lab, along with links or references to these project outputs:

Interactive World War II Map (Education): One user prompt asked for “an interactive map showing the battles of the Pacific theater from Dec 1941–Sep 1945 with summaries of each battle and links to sources.” Labs produced a functional web app: an embeddable map with zoom and a time slider to navigate through battles, each annotated with info and source links. The project runs as a mini web application (HTML/JS/CSS) hosted by Perplexity (on AWS) and demonstrates Labs’ ability to combine historical research with interactive visualization. The code assets for this map (including data and scripts) were made available for download, illustrating that developers can obtain the underlying code generated by Labs. (This project is viewable via Perplexity’s gallery; the code can be exported for further use.)

Financial Portfolio Dashboards (Finance): Perplexity Labs has been used to create analytical dashboards in the finance domain. For instance, a community member (@hamptonism) built a “5-year performance comparison of a traditional stock portfolio vs an AI-driven portfolio” — Labs fetched historical market data, generated comparative charts, and assembled an interactive dashboard highlighting key insights. Another related Labs project involved a “Global Economic Indicator Tracker” that pulls in macroeconomic data from various countries to visualize trends. These examples show Labs leveraging its web browsing and charting tools for data analysis applications — tasks that might involve scraping financial data from the web, using Python/pandas for analysis, then outputting results as graphs and tables. The generated dashboards are not just static images; they often include interactive features (filters, tooltips, etc.), all created by the AI. Developers can take the produced code (e.g. JavaScript chart code or Python scripts) and integrate it into their own systems or refine it further.

Market Research and Business Reports (Startup Use-Case): Labs can aid in business development tasks. In one example, a user prompted: “We are a GenAI consulting firm. Generate a list of 15 potential B2B startup customers (pre-Series B, in the US) that could benefit from AI, with contact info, company summary, location, etc., and present it in a dashboard.” The output was a comprehensive lead-generation report — Labs compiled a list of 15 companies matching the criteria (across sectors like healthcare, manufacturing, cybersecurity), complete with each company’s description, stage, address, and contacts. It even built a dashboard for filtering and highlighting opportunities, and went a step further to draft personalized outreach email templates for each company. This example demonstrates how Labs can automate a substantial chunk of market research and sales prospecting work. A task that would typically require slogging through databases and LinkedIn was distilled into a ready-to-use artifact in one Labs session. A startup could directly use such an output to jumpstart their sales pipeline, effectively turning a Labs project into business value immediately.

Creative Storyboarding and Interactive Content (Creative Arts): Perplexity Labs isn’t limited to data and code — it can generate creative content too. A striking example is a prompt to “develop a short sci-fi film concept in noir style about a 30-year-old female scientist on Mars during a calamity. Create 9 storyboard images and a full screenplay.” Labs managed to produce a complete screenplay titled “Red Dust Conspiracy” along with nine panel storyboard images illustrating key scenes. The output included narrative elements (characters, plot, dialogues) and noir-style descriptions, plus AI-generated images for each storyboard panel. While the quality of the screenplay was described as “coherent and mediocre” by one commentator, the fact that Labs handled both writing and image generation is notable. It showcases integration with image-generation models to produce concept art or illustrations on the fly. This kind of result could be useful for creatives as a first draft: for example, a game designer or filmmaker might use Labs to generate a storyboard and then refine the script and artwork manually. (Indeed, the Project Gallery includes this storyboard example as a reference project.)

Personal Data Analysis and Decision Support (Personal Use): In a real-estate-oriented query, a user asked Labs: “Find areas around New York City with low crime and good schools, under $1M housing, and then identify the 10 best property listings in those areas with a comparison table.” Labs returned a detailed property research report: it chose a few suitable neighborhoods (e.g. parts of New Jersey and Westchester that met the criteria), explained their safety and school ratings, and listed 10 specific properties for sale with a comparison table of features (prices, commute times, school scores, etc.). Essentially, it combined crime rate data, school statistics, and live real estate listings to answer a multi-parameter question, all formatted as a readable report. This illustrates Labs’ potential for personal planning applications (home buying decisions, travel itineraries, etc.), where it aggregates public data into a customized recommendation. The output can save users significant research time, and they can act on the information directly (e.g., visiting the recommended listings).

Developer Prototype from Code Repository (Tech Prototype): Some developers are integrating Perplexity Labs with their own coding projects. A noteworthy case from the community: a developer working on an app (called “ThinkRank” for AI content detection) fed his project’s README and code snippets into Labs to see what it would build. The result? Labs generated a functional prototype web app based on the project description, including an executable demo interface, presumably using the code and assets inferred from the GitHub repo. The developer shared the Labs-generated app link and was amazed that “it not only gave a full executive breakdown, but it coded an app and everything based off my README”, calling the tool “mind-blowing”. He made the prototype publicly available and even provided his GitHub repo link (for ThinkRank) so others could see the source. This example is powerful: it suggests that Labs can read and understand existing code artifacts and then extend or utilize them to create something new (in this case, generating a UI and additional code to demonstrate the project). The developer’s next step was to export the Labs output and integrate it back into his development workflow — he mentioned using VSCode and custom prompt engineering to further refine the app beyond what Labs initially provided. This kind of workflow — AI-generated prototype followed by human polishing — could become a common pattern in software development, accelerating prototyping and MVP creation.

(Each of the above examples is drawn from early user reports and the official gallery. Many projects have publicly shareable links on the Perplexity Labs gallery. Developers can also download project assets or export the entire project (e.g., to PDF or other formats) for use outside Perplexity. In some cases, code and content generated by Labs have been uploaded to GitHub or shared via blogs, as seen with the ThinkRank project.)

3) Community Insights and Discussions Around Perplexity Lab

The developer community’s response to Perplexity Labs has been a mix of enthusiasm for its potential and constructive criticism of its limitations. Given the feature’s newness, many users are actively sharing their experiences on social media (Reddit, Twitter, LinkedIn) and in developer forums. Here are some key insights and discussion points from the community:

“Game-Changing” Productivity — but Early Days: A common sentiment is that Labs showcases a step-change in what AI can do for workflow automation. Users have described their first hands-on experiences as “genuinely impressive” and even “mind-blowing”. For example, one LinkedIn user reported that tasks which “once took hours of manual research and formatting” were completed by Labs in under 10 minutes, calling it a “game-changer” (while noting it’s still an early product). On Reddit, an excited user who built multiple apps with Labs exclaimed, “Perplexity Labs is INSANE!” after witnessing the tool generate a full working app from his project files. Many developers express amazement at how Labs can combine abilities (coding + searching + writing) that previously required juggling several tools. The tone of early discussions is optimistic, with developers brainstorming how it could speed up prototyping, data analysis, or reporting tasks in their jobs.

Examples Fueling the Buzz: The availability of the Project Gallery and people sharing concrete examples has helped convince skeptics. Seeing a live demo (like the WW2 map or a live dashboard) often elicits a “wow, it actually did that!” response. In community channels, users are posting their own Labs project outcomes — ranging from useful business tools to quirky experiments — which in turn inspires others. This “show and tell” dynamic is creating a small but growing community of Perplexity Lab builders. It’s notable that the Labs subreddit has users discussing not just what they built, but how Labs went about it (the series of steps it took), since the interface allows you to inspect the task-by-task process. This transparency is helping users learn prompt techniques from each other’s projects.

Learning Curve and Prompting Challenges: Despite the excitement, developers have identified pain points. The most cited limitation is the difficulty of making follow-up edits or iterative refinements to a project within Labs. As one Reddit user succinctly put it: “The biggest problem with Labs is that it doesn’t handle follow-ups very well. It basically requires you to be a one-shotting ninja.” In other words, the initial prompt largely determines the outcome: if something is wrong or missing in the result, you can’t easily have a back-and-forth dialog to fix it (at least in the current version). Labs sessions do allow some user control (you can insert an instruction or abort a step), but there is not yet a smooth conversational refinement like one might have with ChatGPT. This means prompt engineering upfront is crucial, and some users find it challenging to anticipate everything the AI needs to do in one go. As a best practice, users are sharing tips on writing very clear, detailed prompts for Labs to get the desired outcome (more on this in Section 5).

Accuracy and Reliability Concerns: Given that Labs pulls live data and generates content autonomously, users have been scrutinizing the accuracy of its outputs. Early feedback indicates that while Labs often succeeds in creating the requested output, the details sometimes need verification. For example, a user noted issues with how Labs filtered data in a table (some irrelevant data points weren’t fully filtered out, and a few values looked incorrect), suggesting that not every AI step is perfect. In The Register’s review of a Labs-generated sci-fi script, the result was deemed “coherent” but somewhat bland, implying that creative outputs might lack flair (an expected trade-off when an AI writes a movie script). Takeaway: Developers appreciate that Labs provides source citations and intermediate data, which helps with trust, but they caution that one should review critical outputs (like financial analyses or code) before using them in production. Bugs in generated code or slight data mismatches can occur, so a human in the loop is still important for now.

Integration and Exporting Issues: The community has also discussed the challenge of integrating Labs into existing workflows. By design, Labs outputs are contained within the Perplexity interface, which is great for quick deployment (e.g., the app is instantly hosted for you to test). However, developers who want to take the output and continue development elsewhere have to manually export assets. A Reddit user who built three apps noted surprise that “the apps don’t come as downloadable zip files… instead, they’re hosted on Amazon servers and load in a webview”. While all the files are accessible in the Assets tab, there is currently no single-click “export project as ZIP” (you can download files individually or copy code). Some hackers have found ways to scrape the assets or use the export-to-PDF for documentation, but the process could be smoother. This is seen as a temporary friction — the Labs feature is expected to improve with more export options and perhaps direct GitHub integration in the future (users have started voicing such feature requests). In fact, community “feature wishlists” include: better code editing within Labs, version control, and easier hand-off of code to local environments.
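Until a one-click export exists, the manual workaround described above (download the files individually, then package them yourself) can be scripted locally. The sketch below assumes you have already saved the assets into a folder on disk; the function name `bundle_assets` and the folder layout are illustrative, not part of any Perplexity tooling.

```python
import zipfile
from pathlib import Path


def bundle_assets(asset_dir: str, zip_path: str) -> list:
    """Zip every file under asset_dir, keeping relative paths; returns the names."""
    root = Path(asset_dir)
    target = Path(zip_path).resolve()
    names = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            # Skip directories, and skip the archive itself if it lives in asset_dir.
            if not path.is_file() or path.resolve() == target:
                continue
            relative = path.relative_to(root).as_posix()
            archive.write(path, arcname=relative)
            names.append(relative)
    return names
```

Calling `bundle_assets("labs_assets", "project.zip")` would then produce a single archive that can be handed to a teammate or unpacked into a repository.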

Limits and Pricing: Since Labs is paywalled and limited in queries, some discussion revolves around who should use it. The 50 queries/month cap is ample for occasional projects or prototyping, but power users and teams worry it might be restrictive if one tries to use Labs heavily. Enterprise developers note the lack of official integration with development pipelines or APIs (Labs is mainly a UI feature at the moment; the Perplexity API does not yet expose the full Labs automation). These factors mean that large companies are still just testing Labs rather than adopting it at scale. Some comparisons have been made with alternatives — e.g. people compare Labs with OpenAI’s Code Interpreter (a.k.a. ChatGPT’s Advanced Data Analysis) or Microsoft’s Copilot. The consensus is that Labs is more structured and research-oriented (with citations and multi-step autonomy) whereas something like Code Interpreter allows more free-form Python coding in a notebook style. Each has its niche, and developers are experimenting to see which tool fits which use case best.

Overall, the community buzz portrays Perplexity Labs as high-potential but maturing. Developers are impressed with what it can do even in version 1, and they’re actively discussing workarounds and improvements. There’s an atmosphere of collective learning — as more projects get shared, the community is figuring out how to best leverage this new kind of AI tool. And importantly, feedback from these discussions is likely feeding back to Perplexity’s team (the company has been active on their Reddit and Discord), meaning many of the pain points (follow-up queries, export features, etc.) are probably being worked on. In essence, early adopters see Labs as a glimpse of the future of AI-assisted development, and they’re eager to push its boundaries while acknowledging its current limits.

4) Tools and Technologies Used in Conjunction with Perplexity Lab

Perplexity Labs doesn’t exist in a vacuum — it both integrates various technologies under the hood and is used alongside other tools by developers. Here we outline the key tools, frameworks, and technologies associated with Labs, whether built-in or supplementary:

Multiple LLM Backends: Labs leverages large language models to drive its reasoning and generation. Uniquely, it allows the user to select from several model options. According to The Register, Perplexity Labs lets users choose from “OpenAI’s GPT-4 Omni, Anthropic’s Claude 3.5 (Sonnet and Haiku), among others”. This model diversity is unusual (ChatGPT, for instance, only uses OpenAI models). Developers can pick a model based on the task — e.g., GPT-4 for complex coding or analysis, or Claude for faster narrative generation — giving flexibility in output style and speed. All these models are accessed via Perplexity’s interface; the heavy lifting is done on Perplexity’s servers.

Model Context Protocol (MCP) and Autonomous Agents: Under the hood, Perplexity Labs implements an agentic AI workflow. Its architecture has been described as akin to the “Model Context Protocol (MCP)”, an open standard introduced by Anthropic in late 2024 for connecting AI models to external tools and data sources. In simple terms, this style of architecture lets the AI manage its own context and tools, deciding what actions to take (search, code, etc.) and iterating until the task is complete. This is comparable to how frameworks like LangChain or OpenAI’s function calling work, where an AI agent can plan and execute functions. If Labs does follow an MCP-like design, it is essentially a full-stack AI agent platform, coordinating between the LLM and its various tools. Developers interested in the technical side note that this is what enables Labs to be an “AI OS” that merges search + code + data in one continuous process.
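The plan-and-execute loop described above can be pictured with a small sketch. It is an illustration only, not Perplexity's implementation and not the MCP specification: the tool names (`search`, `run_code`), the `plan_next_step` stub, and the `Step` and `AgentState` types are all hypothetical, and in a real system an LLM would choose each step.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    tool: str       # name of the tool to call
    argument: str   # input passed to that tool


@dataclass
class AgentState:
    goal: str
    observations: List[str] = field(default_factory=list)


# Hypothetical tools; real ones would query a search index or a code sandbox.
def search(query: str) -> str:
    return f"search results for '{query}'"


def run_code(source: str) -> str:
    return f"output of running {len(source)} characters of code"


TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "run_code": run_code}


def plan_next_step(state: AgentState) -> Optional[Step]:
    """Stand-in for the LLM planner: research first, then code, then stop."""
    if len(state.observations) == 0:
        return Step("search", state.goal)
    if len(state.observations) == 1:
        return Step("run_code", "print('chart data')")
    return None  # nothing left to do


def run_agent(goal: str, max_steps: int = 10) -> AgentState:
    state = AgentState(goal)
    for _ in range(max_steps):  # hard cap so the loop always terminates
        step = plan_next_step(state)
        if step is None:
            break
        result = TOOLS[step.tool](step.argument)
        state.observations.append(f"{step.tool}: {result}")
    return state
```

The point of the design is the separation of concerns: the planner only decides what to do next, the tool registry does the work, and the accumulated observations are what the planner sees on the next iteration.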

Headless Browser and Web Scraping: One of Labs’ primary tools is a built-in web browsing capability (often referred to as “deep web navigation”). When a prompt requires information not readily available, Labs can launch a headless browser to search the web and scrape content. It then feeds relevant text back into the LLM for analysis or inclusion in results. This is powered by Perplexity’s search engine and likely a web-scraping stack. For developers, this means Labs can act like an integrated scraper — no need for external tools like BeautifulSoup or Scrapy for many tasks, since Labs will grab data for you. (However, note that this browsing is read-only; if an app requires interaction with external APIs or logging into sites, Labs might be limited — currently it sticks to publicly available info.)
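To make the "scrape content, then feed relevant text back to the LLM" step concrete, here is a minimal sketch of the text-extraction half using only Python's standard library. It works on an HTML string rather than a live page, and the `TextExtractor` class and sample page are made up for illustration; nothing here reflects how Perplexity's own browsing stack is built.

```python
from html.parser import HTMLParser
from typing import List


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def extract_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


SAMPLE_PAGE = """
<html><head><style>p { color: red; }</style></head>
<body><h1>Battle of Midway</h1>
<script>console.log("tracking");</script>
<p>Fought in June 1942.</p></body></html>
"""

print(extract_text(SAMPLE_PAGE))  # Battle of Midway Fought in June 1942.
```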

Code Execution Environment: Another crucial component is Labs’ code interpreter. When a task involves data analysis, calculations, or generating an interactive app, Labs will write code (in languages like Python, JavaScript, or SQL) and run it behind the scenes. This happens in a sandboxed environment on Perplexity’s servers. The Register article indicated that for the interactive map project, Labs generated Python, JavaScript, CSS, and JSON assets and executed them to build the app. We can infer that the environment likely includes common libraries (for example, Python’s pandas or matplotlib for data, or D3.js for JavaScript charts) so that the AI can produce rich outputs. Essentially, Labs has a mini cloud IDE — similar to Jupyter Notebook or OpenAI’s Code Interpreter — where it can compile and run code on demand. Developers can download the code later, but during the Labs session, it’s all handled automatically.
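For readers who want a feel for what "write code and run it behind the scenes" involves, the sketch below runs a generated script in a child process with a timeout and a throwaway working directory. This is an assumption-laden toy: the function name `run_generated_code` is hypothetical, and a child process is not a real security sandbox (a production system would add containers or similar isolation).

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_generated_code(source: str, timeout_seconds: float = 10.0) -> dict:
    """Run Python source in a child process inside a temporary directory."""
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "generated.py"
        script.write_text(source, encoding="utf-8")
        try:
            completed = subprocess.run(
                [sys.executable, "-I", str(script)],  # -I: isolated mode
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=timeout_seconds,
            )
        except subprocess.TimeoutExpired:
            return {"ok": False, "stdout": "", "stderr": "timed out"}
    return {
        "ok": completed.returncode == 0,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


result = run_generated_code("print(sum(range(5)))")
print(result["ok"], result["stdout"].strip())  # True 10
```

Capturing stdout and stderr separately is what lets an agent decide whether to embed the result in the final output or to rewrite the code and try again.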

Data Visualization and Image Generation Libraries: Perplexity Labs can create charts, graphs, maps, and other visual content as part of its outputs. Perplexity describes these as “chart and image creation” tools. For charts, it might use libraries like Plotly or matplotlib (if Python) or Chart.js/D3 (if web). For images, Labs can tap into AI image models (perhaps via Stable Diffusion or DALL·E APIs) to generate illustrations or photos based on context. In the storyboard example, Labs produced noir-style images, suggesting it has access to a generative image model pipeline. All of this is abstracted away from the user: you just see the resulting PNGs or SVGs in your Assets. Developers who need custom visuals might still use their own tools later, but Labs’ ability to automatically visualize data is a huge time-saver for quick reports.

Standard Web Tech (HTML/CSS/JS) and Hosting: The front-end of Labs-generated applications is typically standard web technology. As one user noted, “the stack is just the usual web tech like HTML, CSS, JavaScript, Python, and others; so web developers can jump right in.” This means if Labs builds a mini website or dashboard, it’s delivered as normal web files, which can be opened and edited with any editor. Perplexity temporarily hosts the apps on AWS for user convenience, but developers can take that code and deploy it on their own servers if desired. Knowing that Labs uses conventional frameworks (no proprietary file formats) gives developers confidence that they can extend the AI’s output, for instance, by plugging the HTML into a larger React application or incorporating the Python code into a larger project. It’s complementary rather than a closed system.
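Because the output is plain web files, a downloaded app can be previewed locally before deciding where to deploy it. The sketch below uses Python's built-in `http.server`; the helper name `serve_directory` and the folder name in the usage note are assumptions for illustration.

```python
import functools
import http.server
import threading


def serve_directory(directory: str, port: int = 0) -> http.server.ThreadingHTTPServer:
    """Serve static files from directory on localhost in a background thread.

    port=0 asks the operating system for any free port.
    """
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, `server = serve_directory("labs_assets")` followed by reading `server.server_address[1]` gives the port to open in a browser; call `server.shutdown()` when finished. This is only suitable for local preview, not for production hosting.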

Perplexity API and Playground: Outside the Labs UI, Perplexity also offers an API and a “Playground” (labs.pplx.ai). Currently, these are more for the LLM Q&A and search features, not full Labs projects. However, advanced users are exploring ways to use the API in tandem with Labs. For example, one could imagine using the API to run smaller subtasks or to integrate Perplexity’s search answers into their app, while using Labs via the UI for heavy project generation. There’s also mention of “Internal Knowledge Search” for enterprise (allowing Perplexity to search company documents). A company could combine that with Labs to have the AI work on internal data, though this is an enterprise feature requiring some setup. In general, as Labs matures, we expect more integration points (perhaps a future API for Labs itself). For now, most “conjunction” use means exporting Labs outputs to other dev tools (like VSCode, Jupyter, etc.) as described earlier.

External Tools and Custom Workflows by Users: Developers have begun to bring Labs into their toolchain creatively. For instance, the Reddit user clduab11 combined Labs with his own prompt-engineering framework and VSCode: he used Labs to get a prototype, then fed that output into his coding environment to iterate further. Others have discussed using Labs output as a starting point and then employing frameworks like Django or React if they want to turn the prototype into a full application. There is also interest in using Labs alongside orchestration frameworks like LangChain or RPA tools — basically letting Labs handle the high-level project creation and then chaining it with other automation. While such hybrid uses are nascent, they highlight that Labs is fitting into a broader ecosystem of AI and developer tools.

In summary, Perplexity Labs builds on a stack of AI and software tools behind the scenes (LLMs, browsers, code sandboxes, etc.) and outputs results in standard formats that developers can further manipulate. It is both a self-contained platform and a complement to traditional development: you can use it to offload work, then take the output into whatever frameworks or environments you normally use. The fact that it speaks the “language” of web dev and data science (HTML, JS, Python, CSV, etc.) makes it relatively easy to integrate Labs-generated artifacts into real-world projects or business workflows.

5) Best Practices and Tips for Using Perplexity Lab

Based on the current developer experiences, several best practices are emerging to get the most out of Perplexity Labs. If you’re planning to use Labs for your projects, consider the following recommendations:

Craft a Detailed Initial Prompt: Because Labs works best as a one-shot project builder (with limited follow-up questions), spend time writing a clear and specific prompt that outlines exactly what you need. Include the context, desired outputs, and any constraints in your initial request. For example, instead of asking “Analyze my sales data,” specify “Analyze my sales CSV (attached) for quarterly trends and generate charts plus a summary report.” The more guidance you give up front, the more likely Labs will hit the mark. Users humorously note that Labs currently requires you to “be a one-shotting ninja” in prompt formulation — so anticipate the steps and results you expect, and describe them in the prompt. This reduces the need for iterative corrections.
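One way to make the "context, desired outputs, constraints" habit repeatable is to assemble prompts from a small template. The helper below is a hypothetical convenience, not a Perplexity feature, and the column names in the example are invented for illustration; the resulting text is simply pasted into Labs.

```python
from typing import Sequence


def build_labs_prompt(
    context: str,
    task: str,
    deliverables: Sequence[str],
    constraints: Sequence[str] = (),
) -> str:
    """Assemble context, task, deliverables, and constraints into one prompt."""
    lines = [f"Context: {context}", f"Task: {task}", "Deliverables:"]
    lines += [f"- {item}" for item in deliverables]
    if constraints:
        lines.append("Constraints:")
        lines += [f"- {item}" for item in constraints]
    return "\n".join(lines)


prompt = build_labs_prompt(
    context="Attached CSV of sales orders with date, region, and revenue columns.",
    task="Analyze quarterly revenue trends.",
    deliverables=["Charts of revenue by quarter", "A summary report"],
    constraints=["Use only the attached file", "Flag any missing data"],
)
print(prompt)
```

Writing the deliverables as an explicit list makes it easier to spot, before spending a query, that something (a chart type, a file format) was left unspecified.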

Leverage the Project Gallery and Templates: If you’re unsure how to phrase your request, look at the examples in Perplexity’s Project Gallery for inspiration. There you can find prompts that worked for others (e.g. how to ask for a dashboard vs. a presentation). It’s often effective to borrow the structure of an existing example and adapt it to your needs. For instance, if you see a “create a dashboard for finances” prompt, you might model your prompt similarly for your own data. Using these community-proven prompts as templates can dramatically improve your outcome. The gallery essentially serves as a set of templates or recipes — take advantage of it.

Keep an Eye on the Process (and Intervene if Needed): When you run a Labs session, monitor the Tasks/Steps that it executes (Labs will usually show a running log of actions like “Gathering data… Generating code… Executing code…”). If you notice it doing something irrelevant or if it’s stuck, use the controls provided: you can pause or cancel the run at any time. You can also insert clarifications on the fly — for example, if you realize you forgot to specify a detail (say, the format of a report), you might try adding an instruction in the middle of the run. Labs does allow some mid-course corrections, although not full Q&A style interaction. Staying engaged with the process helps ensure the final output aligns with your intent, rather than treating it as a black box. Think of it as managing an autonomous intern: supervision can improve the results.

Validate and Refine the Outputs: Treat Labs’ output as a first draft or prototype. Before deploying it or presenting it as final, validate the content. If it’s code, skim through the code for any obvious logical errors or security issues (Labs might not handle edge cases perfectly). If it’s data or analysis, cross-check critical figures with a quick manual calculation or ensure sources cited indeed back the claims. Users have noted minor mistakes (like slight mis-filtering of data) in some cases, so a bit of QA on your part is wise. After validation, you can refine the output further: e.g., format the report to your liking, or enhance the generated app’s UI/UX using your own coding skills. Labs gives you a big head-start, but polishing the last 10–20% can elevate the work from good to great.
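The "cross-check critical figures" step can often be automated when the underlying data is available as a CSV in the Assets tab. The sketch below recomputes group totals and compares them with the numbers a report claims; the column names, the sample data, and the helper names are all made up for illustration.

```python
import csv
import io
import math


def recompute_totals(csv_text: str, group_column: str, value_column: str) -> dict:
    """Sum value_column per group_column from CSV text."""
    totals = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = row[group_column]
        totals[key] = totals.get(key, 0.0) + float(row[value_column])
    return totals


def find_mismatches(reported: dict, recomputed: dict, rel_tol: float = 1e-6) -> list:
    """Return keys whose reported value is missing or differs from the data."""
    mismatched = []
    for key in sorted(set(reported) | set(recomputed)):
        if key not in reported or key not in recomputed:
            mismatched.append(key)
        elif not math.isclose(reported[key], recomputed[key], rel_tol=rel_tol):
            mismatched.append(key)
    return mismatched


SALES_CSV = """quarter,revenue
Q1,100.0
Q1,50.5
Q2,200.0
"""

reported_in_report = {"Q1": 150.5, "Q2": 210.0}  # Q2 is deliberately wrong
recomputed = recompute_totals(SALES_CSV, "quarter", "revenue")
print(find_mismatches(reported_in_report, recomputed))  # ['Q2']
```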

Export Assets and Integrate with Your Workflow: Once a Labs session is complete, use the Assets tab to download any files you need. If an interactive app was created, you can download the HTML/CSS/JS and host it yourself or merge it into a larger project. If charts or images were produced, you can download those for inclusion in presentations. Perplexity Labs also offers an Export option (to export the entire answer in formats like PDF or Markdown), which is useful for sharing the results with others. For example, you could export a research report to PDF and send it to your team, or export code to a text file for editing in VSCode. One pro-tip from early users: if you plan to do additional development on a Labs-generated app, import the code into a version control system (like git) immediately. That way, you can track changes you make on top of the AI’s code. In short, don’t leave the outputs locked in Labs; extract them and build on them using your normal tools.

Mind the Query Limits (Plan Your Usage): With the 50 Labs queries/month cap for Pro users, it’s important to use your queries wisely. Each new Labs prompt or follow-up counts, so before you hit “Go,” double-check that your prompt is complete. It can help to combine related tasks into one Labs session if feasible, rather than splitting them into separate sessions. For example, ask for a report that includes both analysis and a slide deck in one go, instead of two separate queries. If you do run low on queries, you might wait until the monthly reset or consider whether the task can be accomplished in the standard Research mode as a fallback. Additionally, note that really large projects can consume time: Labs can run for up to half an hour or more on complex tasks. If you have something very time-sensitive or iterative, you might not want to burn a query on an experiment. Plan ahead, and use Labs where it provides the most leverage (those tasks that would take you many hours).
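A quick back-of-the-envelope calculation shows what the quota means in practice. The figures below are the ones quoted in this guide (50 queries per month, roughly 10 to 30 minutes per run); the helper names are hypothetical and the result is an estimate, not a measured value.

```python
MONTHLY_QUERY_CAP = 50        # Pro quota cited in this guide
TYPICAL_MINUTES = (10, 30)    # run-time range cited in this guide


def remaining_queries(used: int, cap: int = MONTHLY_QUERY_CAP) -> int:
    """Queries left this month, never below zero."""
    return max(cap - used, 0)


def run_time_hours(queries: int, minutes_per_query: int) -> float:
    """Total run time in hours for a number of queries."""
    return queries * minutes_per_query / 60


low = run_time_hours(MONTHLY_QUERY_CAP, TYPICAL_MINUTES[0])
high = run_time_hours(MONTHLY_QUERY_CAP, TYPICAL_MINUTES[1])
print(f"{low:.1f} to {high:.1f} hours of run time per month")
print(remaining_queries(used=38), "queries left")
```

In other words, using the full quota corresponds to somewhere between about 8 and 25 hours of Labs run time per month under these assumptions.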

Choose the Right Model for the Task: Since Labs allows model selection (when applicable), pick the model that best fits your project. For coding-intensive projects, OpenAI’s GPT-4o (“omni”) is generally regarded as strong at producing correct code. For summarization or text-heavy reports, Claude might be faster or more verbose. The differences aren’t always huge, but power users suggest that model choice can influence style and speed. Also, make sure the “Browser” tool is enabled in Labs if your task needs web data. It is on by default, but if you don’t need web search (say, your data is entirely in an attached file), disabling external browsing can sometimes make the process quicker and more focused.

Security and Privacy Considerations: If you’re using Labs with proprietary or sensitive data (like uploading a company CSV), remember that this data is processed in Perplexity’s cloud. The company has a privacy policy, but you should avoid inputting highly confidential information unless you trust the service and, ideally, have an enterprise agreement. On the flip side, when Labs writes code, review it for security vulnerabilities, especially if you plan to deploy the generated app. For instance, if Labs sets up a simple web form, you might need to add input validation or other security checks before using it in production. These are standard precautions when incorporating AI-generated code or content.
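
To illustrate the kind of check a generated web form may be missing, here is a minimal server-side validation sketch using only the Python standard library. The field names, length limit, and deliberately loose email pattern are assumptions made for this example; they are not taken from any code Labs produces.

```python
import html
import re

# Deliberately loose pattern: one "@", no whitespace, a dot in the domain part.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
MAX_MESSAGE_LEN = 2000  # hypothetical limit for this example


def validate_contact_form(form: dict) -> tuple:
    """Return (cleaned_fields, errors) for a hypothetical contact form."""
    cleaned = {
        "name": str(form.get("name", "")).strip(),
        "email": str(form.get("email", "")).strip(),
        "message": str(form.get("message", "")).strip(),
    }
    errors = []
    if not cleaned["name"]:
        errors.append("name is required")
    if not EMAIL_RE.fullmatch(cleaned["email"]):
        errors.append("email is not valid")
    if not cleaned["message"]:
        errors.append("message is required")
    elif len(cleaned["message"]) > MAX_MESSAGE_LEN:
        errors.append("message is too long")
    return cleaned, errors


def render_safe(text: str) -> str:
    """Escape user input before echoing it back into an HTML page."""
    return html.escape(text)


fields, problems = validate_contact_form(
    {"name": "Ada", "email": "not-an-email", "message": "<b>Hello</b>"}
)
print(problems)
print(render_safe(fields["message"]))
```

Validation rejects malformed input, while escaping at render time keeps submitted markup from being interpreted by the browser. A production form would typically also need CSRF protection and rate limiting, which are outside the scope of this sketch.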

Stay Updated and Engage with the Community: Perplexity Labs is evolving rapidly, and new features and fixes are likely to roll out based on user feedback. It’s a good idea to follow Perplexity’s updates (their Discord, Reddit, or blog). For example, developers have requested features like easier project export and improved follow-up interactions, and such enhancements could appear soon. By staying in the loop, you can adapt your usage to new capabilities (perhaps Labs will lift query limits or add collaboration features). Also, engage with fellow users: if you encounter a challenge, chances are someone on the Perplexity subreddit or Discord has seen it too and might have a workaround. The community can be a valuable resource for tips, such as how to prompt for a specific format or how to interpret an error Labs returned. In essence, treat this as being part of a beta community: contributing your input and learning from others will help you get the best results and shape the future of the tool.

Use Labs to Accelerate, Not Replace, Your Development: Finally, a philosophical best practice: let Perplexity Labs do the heavy lifting of tedious work while you steer the project. The ideal workflow is to let Labs handle the grunt work (researching information, boilerplate coding, initial drafts) and then apply your expertise to refine and customize the output for your specific needs. As one marketing blogger put it, Labs is like “the most overachieving intern ever”: it will give you a comprehensive draft in minutes. However, you are still the lead developer or analyst who ensures the final product is correct, polished, and aligned with business goals. Used in this way, Labs can dramatically boost your productivity and even enable small teams to accomplish tasks that previously required larger staff or more time. Embrace it as a powerful assistant, and pair its strengths (speed, breadth) with your human strengths (judgment, domain knowledge, creative fine-tuning) for the best outcomes.

By following these best practices, developers and professionals have been able to harness Perplexity Labs effectively, producing everything from client-ready reports overnight to functional app prototypes that jump-start development. As the tool and its community continue to grow, these guidelines will no doubt be refined, but they offer a strong starting point for anyone looking to ride the wave of this new AI-driven development paradigm.

Sources: The insights and examples above were gathered from official announcements and documentation as well as community discussions by early users of Perplexity Labs. Key references include the InfoQ news piece introducing Perplexity Labs, a detailed hands-on review on Analytics Vidhya, analysis from The Register’s article on Labs, the Perplexity Labs Help Center/FAQ, and multiple user-generated posts on Reddit and blogs sharing real-world usage experiences. These sources are cited throughout the report to provide further detail and evidence for each point.

Enhancing your development workflow with Perplexity Labs is just the beginning. Ready to fully unlock the capabilities of this AI platform? Explore how Perplexity is reshaping research, search, and application development with these essential reads:

Perplexity vs. ChatGPT: The Best Tool for Research and Fact-Checking

Understand why Perplexity might be your go-to AI assistant for accuracy, citation, and reliable insights compared to ChatGPT.

Perplexity Labs in Action: Real-World Project Examples

Explore diverse examples of applications and prototypes developers are creating with Perplexity Labs, showcasing its practical capabilities.

Perplexity vs. Google Search: Which Should You Choose?

Discover why Perplexity's AI-powered search can outperform traditional search engines, and when it's best suited for your workflow.

Maximize Your Research with Perplexity’s Deep Research Mode

Learn powerful tips to get the most comprehensive and insightful results from Perplexity's advanced multi-step research capabilities.

Perplexity Labs vs. AutoGPT: Choosing the Right AI Assistant

Dive into an in-depth comparison of Perplexity Labs and AutoGPT to determine which autonomous AI fits your project’s needs.

Harnessing Perplexity’s Focus Modes for Precise Search

See how targeted search modes can streamline your results and enhance the relevance and accuracy of your queries.

What is Perplexity Labs? Your Guide to Advanced AI Creation

Get an essential introduction to Perplexity Labs and its transformative potential for automating complex, multi-step tasks.

Deep Dive into Perplexity AI's Deep Research Mode

Find out how Perplexity’s deep research capabilities can automate hours of research into concise, expertly summarized insights.

Give yourself the cutting-edge knowledge you need to stay ahead—start exploring today!

by Dr. Hernani Costa, First AI Movers Pro