Welcome to a special deep-dive edition where we tackle one of AI's most fundamental yet misunderstood concepts: tokens. If you've ever wondered why ChatGPT sometimes "forgets" earlier parts of your conversation, or why some AI tools charge different rates for similar tasks, the answer lies in understanding token limits.

Today, we're breaking down everything you need to know about tokens, pricing models, and when to leverage massive context windows versus standard models. Whether you're a developer optimizing costs, a business leader evaluating AI investments, or simply curious about how these systems work under the hood, this comprehensive guide will give you the clarity you need to make informed decisions.

Let's demystify the economics and mechanics behind AI's memory system.

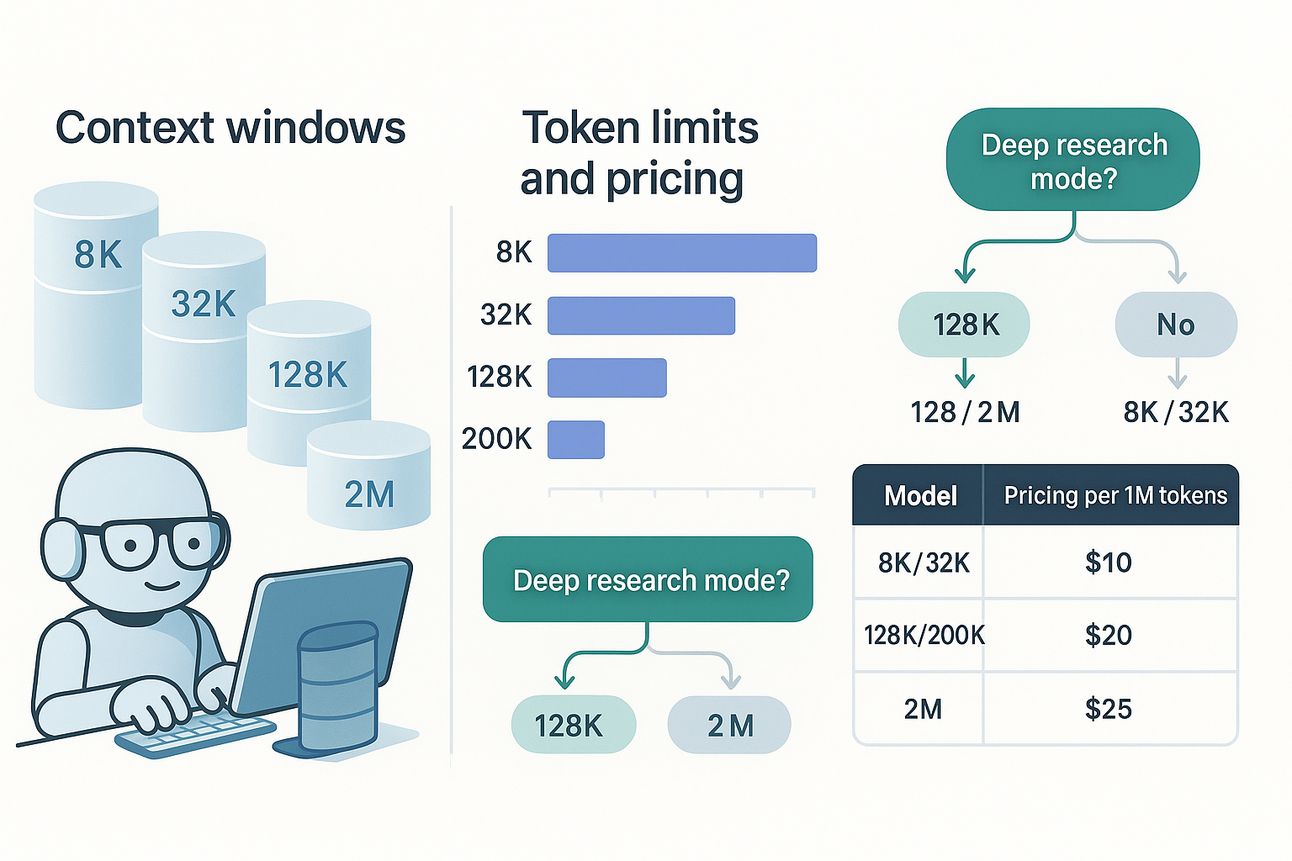

Large language models (LLMs) process text in pieces called tokens. You can think of tokens as chunks of words or characters — the basic units of a model’s short-term memory. Every prompt you send, plus the model’s reply, must fit within a fixed context window (the token limit). Below, we address three common questions about token limits, token pricing, and when to leverage very large context windows (sometimes called “deep research” mode). This FAQ is written for a broad audience — developers, business decision-makers, and curious readers alike — with notes for each perspective where relevant.

What is a token, and what does a token limit mean in practice?

Answer: A token is a snippet of text (often a word or part of a word) that a model uses for processing language. An LLM’s context window is the maximum number of tokens it can handle at once (including both the input prompt and the output). In simple terms, the context window is the model’s working memory or short-term conversational memory. A larger window means the model can “remember” and attend to more information in one go. In comparison, a smaller window means it can handle only shorter prompts or conversations before it forgets or loses earlier context.
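The prompt and the reply share one window, so an application has to leave room for the output it expects. Below is a minimal Python sketch of that check. It assumes a hypothetical model with a 128,000-token window and a 4,096-token cap on each response; these are example figures, not the limits of any specific product.

```python
def fits_in_context(prompt_tokens: int, max_output_tokens: int, context_window: int) -> bool:
    """True if the prompt plus the output we reserve fits inside the window."""
    return prompt_tokens + max_output_tokens <= context_window


def output_budget(prompt_tokens: int, context_window: int, max_output_cap: int) -> int:
    """Tokens left for the reply: the unused part of the window, capped by the per-response limit."""
    remaining = context_window - prompt_tokens
    return max(0, min(remaining, max_output_cap))


if __name__ == "__main__":
    WINDOW, OUTPUT_CAP = 128_000, 4_096  # hypothetical model limits

    print(fits_in_context(120_000, OUTPUT_CAP, WINDOW))  # True  (124,096 <= 128,000)
    print(fits_in_context(126_000, OUTPUT_CAP, WINDOW))  # False (130,096 > 128,000)
    print(output_budget(126_000, WINDOW, OUTPUT_CAP))    # 2000
```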

For curious readers: Imagine you’re reading a book and can only keep a certain number of pages in mind at once — that number of pages is like the token limit for an AI. If the conversation or document exceeds that length, the model can’t consider the extra text unless earlier parts are dropped or summarized. A bigger token limit lets the AI consider more context at once, making its responses more detailed and relevant when dealing with long inputs.

For business users: The token limit determines how much information you can feed the model in a single query. For example, a model with a 100K token window could take in hundreds of pages of a report or multiple documents at once. This is useful for tasks like analyzing long contracts, entire knowledge bases, or lengthy conversations without breaking them up. It can improve the quality of insights because the AI sees all the relevant info at once, which reduces the need for you to manually chunk data. In short, a larger context window can unlock more complex use cases (summarizing large documents, exhaustive Q&A, etc.), potentially saving time and effort in data processing. However, larger context models may be more expensive and slower (see the third question below).

For developers: The token limit is a hard cap. If your input plus the requested output exceeds it, the API will typically either reject the request with an error or stop generating once the limit is reached, which leaves you with a cut-off response. Developers therefore have to design prompts and conversations to stay within the limit. In chat systems, earlier messages might have to be dropped when the history grows too long (some chat interfaces do this automatically in a first-in-first-out fashion). A practical tip is to monitor token usage in your application and summarize or omit irrelevant details as you approach the limit. Also, different models have different limits, so choose one that fits your use case. For instance, some models now offer context windows large enough to hold entire books or codebases at once.
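The first-in-first-out trimming described above takes only a few lines of Python. The `estimate_tokens` helper is an assumption: it applies the common rule of thumb of roughly 4 characters per token for English text. A real application would count with the provider's own tokenizer instead.

```python
from collections import deque


def estimate_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token in English); not a real tokenizer."""
    return max(1, len(text) // 4)


def trim_history(messages: list[str], budget: int) -> list[str]:
    """Drop the oldest messages first until the remaining history fits in `budget` tokens."""
    kept = deque(messages)
    total = sum(estimate_tokens(m) for m in kept)
    while kept and total > budget:
        total -= estimate_tokens(kept.popleft())  # oldest message goes first (FIFO)
    return list(kept)


if __name__ == "__main__":
    history = ["a" * 400, "b" * 400, "c" * 400]  # three messages of ~100 tokens each
    trimmed = trim_history(history, budget=250)
    print(len(trimmed), trimmed[0][0])  # 2 b
```

A production chat app would usually also pin the system prompt so that it is never dropped. Another option is to summarize the removed turns rather than discard them.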

Examples of context window sizes in popular models: Modern AI models vary widely in how many tokens they support in a single prompt. Here are a few examples:

OpenAI GPT-4 Turbo: Up to 128,000 tokens in the context window (enough to fit ~300 pages of text). This is a major increase from earlier 8K or 32K token versions.

Google Gemini 1.5 Pro: Standard window of 128,000 tokens. Google has also made a 2-million-token (2M) window available to select developers in preview, which was the longest of any major model when it was announced.

Anthropic Claude (Claude 3 family): Ships with a 200,000 token window by default (about 500 pages of text). Anthropic has hinted that their models can accept over 1 million tokens and may offer million-token contexts to certain enterprise partners who need it.

In summary, the token limit defines how much content the AI can take into account at once. It’s like the capacity of the model’s notepad: anything beyond that size won’t fit unless you clear or condense what’s already written. Larger notepads (contexts) let the model work with more information simultaneously, which can be incredibly powerful — but they come with cost and performance considerations, as we explore next.

How is token usage measured and priced? Is “1 million tokens” a standard unit for pricing?

Answer: Yes. In the AI industry, usage is usually measured in tokens, and providers quote prices per fixed block of tokens (such as per 1,000 tokens or per 1,000,000 tokens). Lately, pricing has gravitated toward a “per million tokens” unit, which you can think of as analogous to a kilowatt-hour in electricity or a mile in distance. It gives you a standardized way to estimate costs for a given amount of AI usage. For example, if a model charges $15 per million input tokens, feeding it 1 million tokens of text costs about $15. The output it generates is usually billed separately, often at a higher rate.

For curious readers: Why tokens matter for cost: AI models don’t think for free. Each token processed (whether in your prompt or in the AI’s response) consumes computing power, and providers charge for that consumption. You can imagine tokens like cell phone minutes or data in an internet plan: using more means paying more. One million tokens is simply a convenient, chunky unit for quoting prices. For a rough sense of scale, 1 million tokens is about 750,000 words of English (perhaps 8–10 novels, or 2,500–3,000 pages of text). It’s a big chunk of content! If an AI can handle that in one go, providers will price it accordingly.
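The conversions above rest on two rules of thumb: about 0.75 English words per token, and an assumed 250 to 300 words per printed page. Here is a small Python sketch of that arithmetic. Both constants are approximations, and real ratios vary by language and tokenizer.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb for English text
WORDS_PER_PAGE = 300    # assumed page density; 250 gives the higher page estimate


def tokens_to_words(tokens: int) -> int:
    """Approximate English word count for a token count."""
    return round(tokens * WORDS_PER_TOKEN)


def tokens_to_pages(tokens: int, words_per_page: int = WORDS_PER_PAGE) -> int:
    """Approximate page count for a token count."""
    return round(tokens_to_words(tokens) / words_per_page)


if __name__ == "__main__":
    print(tokens_to_words(1_000_000))       # 750000
    print(tokens_to_pages(1_000_000))       # 2500
    print(tokens_to_pages(1_000_000, 250))  # 3000
```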

For business users: Pricing examples: Different companies have different pricing, but they can usually be compared on a per-million-token basis. For instance, Anthropic’s Claude 4 models (2025 generation) are priced at about $3 per million input tokens and $15 per million output tokens for the standard tier (Claude “Sonnet 4”). More powerful versions like Claude “Opus 4” cost around $15 per million input and $75 per million output. On Sonnet 4, sending 1,000,000 tokens of input (an extreme case) would cost about $3, and having the model generate 1,000,000 tokens of output (also extreme) would cost about $15. A real workload is a mix of both, with each part billed at its own rate. OpenAI also quotes prices per million tokens. As of mid-2025, the GPT-4.1 model costs about $2.00 per 1M input tokens and $8.00 per 1M output tokens. These are ordinary per-token rates expressed at a larger scale: you could equally say $0.002 per 1,000 input tokens, but at the volumes people use these models, per-million figures are easier to read.

Why per million? Once models started handling context in the hundreds of thousands of tokens, discussing cost per 1,000 tokens (kilotoken?) started to feel too granular. Think of it like quoting cloud storage in gigabytes instead of megabytes once usage grows. One million tokens has become a de-facto unit on many pricing pages — much like meters or miles are standard units for distance. It doesn’t mean you have to use a million tokens at once; it’s just a convenient benchmark. For smaller usage, you’d prorate it (e.g., 100K tokens at $3 per million would cost about $0.30).
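Prorating a per-million rate is a single multiplication, applied separately to input and output tokens. Here is a minimal Python sketch using the mid-2025 rates quoted above. The dictionary keys are informal labels rather than official API model identifiers. Rates also change over time, so check the current pricing pages before relying on these numbers.

```python
# USD per 1M tokens as (input_rate, output_rate), taken from the figures quoted above.
RATES = {
    "claude-sonnet-4": (3.00, 15.00),
    "claude-opus-4": (15.00, 75.00),
    "gpt-4.1": (2.00, 8.00),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Prorated cost: tokens / 1,000,000 times the per-million rate, for input and output separately."""
    in_rate, out_rate = RATES[model]
    return input_tokens / 1_000_000 * in_rate + output_tokens / 1_000_000 * out_rate


if __name__ == "__main__":
    print(f"${cost_usd('claude-sonnet-4', 100_000, 0):.2f}")  # $0.30, the 100K example
    # Same workload (200K input, 10K output) on each model:
    for model in RATES:
        print(f"{model}: ${cost_usd(model, 200_000, 10_000):.2f}")
    # claude-sonnet-4: $0.75, claude-opus-4: $3.75, gpt-4.1: $0.48
```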

For developers: Implications for budgeting: Always be mindful of how tokens translate to dollars. If your application sends large prompts or gets long answers, those tokens add up. For example, OpenAI’s GPT-4 Turbo (128K context) was priced at roughly $0.01 per 1K input tokens and $0.03 per 1K output tokens, which works out to $10 per million input tokens and $30 per million output tokens. A hefty 50K-token prompt with a 5K-token answer would therefore cost about $0.65: 50,000 × $10/M = $0.50 for input, plus 5,000 × $30/M = $0.15 for output. These costs multiply with usage at scale or with extremely large contexts. As a developer, you should take steps to optimize token usage: trim unnecessary text, use shorter formats, and consider techniques like caching repeated content. Several platforms offer prompt or “context caching,” where static content reused across multiple calls is billed at a reduced rate instead of the full input price each time. Token counting utilities are available to estimate usage before you send prompts, so use them to avoid surprises. Overall, treat tokens as a valuable resource, much like API bandwidth, that you pay for in proportion to how many you use.
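You can estimate the effect of caching on repeated calls with the GPT-4 Turbo rates quoted above ($10 per million input tokens, $30 per million output tokens). In this Python sketch the `cache_discount` parameter is a hypothetical figure. Providers implement caching differently (discount levels, extra charges for writing to the cache, storage fees), so substitute the terms of the platform you actually use.

```python
def call_cost(input_tokens: float, output_tokens: float,
              in_rate: float = 10.0, out_rate: float = 30.0) -> float:
    """Cost in USD of one call; rates are USD per 1M tokens (GPT-4 Turbo figures by default)."""
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


def repeated_cost(static_tokens: int, question_tokens: int, output_tokens: int,
                  calls: int, cache_discount: float = 0.0) -> float:
    """Total cost of `calls` requests that resend the same static document.

    The first call pays full price for the document; later calls pay
    (1 - cache_discount) of the input rate for it (a simplified caching model).
    """
    first = call_cost(static_tokens + question_tokens, output_tokens)
    later = call_cost(static_tokens * (1 - cache_discount) + question_tokens, output_tokens)
    return first + (calls - 1) * later


if __name__ == "__main__":
    print(f"${call_cost(50_000, 5_000):.2f}")  # $0.65 for a 50K prompt and a 5K answer
    # Ten questions (500 tokens each) about the same 50K-token document:
    print(f"${repeated_cost(50_000, 500, 5_000, calls=10):.2f}")                      # $6.55
    print(f"${repeated_cost(50_000, 500, 5_000, calls=10, cache_discount=0.5):.2f}")  # $4.30
```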

When should I use a model with a very large context window (“deep research” mode) versus a standard model, and what are the trade-offs?

Answer: Using models with ultra-large context windows (hundreds of thousands or even millions of tokens) can be incredibly powerful for certain tasks, but it’s not always the best choice for everyday use. There are trade-offs in speed, cost, and even accuracy to consider. Here’s a breakdown of when to use one versus the other, and what it means for different users:

Use large-context models when… you truly need to feed huge amounts of information or maintain a very long conversation without losing earlier context. Examples include analyzing a lengthy financial report, feeding an entire codebase to get a code review, or conducting a Q&A over several chapters of a book in one go. These scenarios benefit from the AI having all the data at once. With a 1-million-token window, you could theoretically input the text of about 8 novels or 200 podcast transcripts at once, and a 2M-token window roughly doubles that. This capability can simplify workflows. Google researchers noted that with a 2M-token model like Gemini, you might skip building a complex Retrieval-Augmented Generation (RAG) pipeline: instead of retrieving pieces of text from a database, you can put everything relevant into the prompt and let the model handle it. In other words, “deep research mode” (huge context) lets the model read and reason over vast content in a single session, which can yield very comprehensive answers or allow multi-step reasoning with all facts on hand.

Use standard/smaller-context models when… your task can be handled with less data at a time, or when cost and latency are concerns. If you only need to ask a straightforward question or analyze a short document, a model with a smaller context window (say 4K, 16K, or 32K tokens) is usually far more efficient: it will typically respond faster and cost significantly less. For example, summarizing a 5-page article doesn’t require a 100K-token context model, because a 16K model would handle it fine. Likewise, many conversational applications (chatbots, simple Q&A) rarely need more than a few thousand tokens of context (just the recent dialogue and maybe a small knowledge snippet). Using an ultra-large context model in such cases would be overkill, like renting a cargo truck to deliver a single shoebox. In summary: save the big guns (million-token models) for problems that inherently involve very large inputs or very long chains of dialogue or analysis.
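One way to apply this rule in an application is to route each request to the smallest context tier that can hold it. The tier names and sizes below are hypothetical placeholders. The token count is assumed to come from whatever estimator or tokenizer you already use.

```python
# Hypothetical model tiers as (name, context_window_tokens); not real product names.
TIERS = (
    ("large-1m", 1_000_000),
    ("small-16k", 16_000),
    ("medium-128k", 128_000),
)


def pick_model(prompt_tokens: int, reserved_output_tokens: int = 2_000,
               tiers: tuple[tuple[str, int], ...] = TIERS) -> str:
    """Return the smallest-window tier that fits the prompt plus the reserved output."""
    needed = prompt_tokens + reserved_output_tokens
    for name, window in sorted(tiers, key=lambda tier: tier[1]):
        if needed <= window:
            return name
    raise ValueError(f"{needed} tokens exceed every available context window")


if __name__ == "__main__":
    print(pick_model(3_000))    # small-16k: a short article
    print(pick_model(90_000))   # medium-128k: a long report
    print(pick_model(600_000))  # large-1m: a whole codebase or several books
```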

Key implications and considerations:

For curious/general users: Bigger isn’t always better for answers. A model with a million-token memory sounds amazing (and it is, for heavy tasks), but if you only chat about everyday topics, you won’t notice a difference except possibly a slower response. In fact, extremely large contexts can introduce some noise: the model might pull in irrelevant details from a huge input if not prompted carefully. Keep in mind, too, that response length has its own token limit: a model might read a million tokens but still only output, say, a few thousand at a time. Use high-context models when you need the AI to “read” a lot of material or maintain a long history. Otherwise, a faster, cheaper model with a smaller window is usually sufficient and more cost-effective.

For business decision-makers: Consider cost-performance trade-offs. Large context models open up new use cases, for example an analyst bot that you can feed your entire quarterly financials and legal documents into and then query for insights. This could replace or accelerate manual research work. However, each run can be expensive. Longer processing also means higher latency: an employee might wait 30 seconds or more for a result when querying a huge report, versus a few seconds on a smaller model with a targeted query. There’s also an accuracy aspect. Providers like Anthropic have worked on improving long-context recall, but models can sometimes lose precision or “forget” details when the context is extremely large (though this is improving with new techniques). As a business, you should use large contexts for high-value analyses where the breadth of information justifies the cost. For routine queries, it might be more economical to use a smaller context and, if needed, design a retrieval system (like a vector database + smaller LLM) to handle large data. In fact, there’s a trade-off between building a retrieval pipeline and simply using a giant context. The break-even point depends on your scenario. If you have experts to set up a retrieval system and your queries only need small slices of data, retrieval can save money. If you lack that infrastructure or need ad-hoc deep dives, paying the model to ingest everything may be faster to implement, albeit at a higher per-query cost. Also note that some vendors offer prompt caching: if you repeatedly query the same large document, the cached portion is billed at a reduced rate on later calls instead of at the full input price every time. This can mitigate the costs of large contexts in ongoing use.
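The break-even reasoning above can be made concrete with simple arithmetic. The Python sketch below compares two approaches: sending a whole 150K-token document with every query, or sending only about 8K tokens of retrieved passages. It uses the $3/$15 per-million Sonnet 4 rates quoted earlier. The $2,000 fixed cost for building and running a retrieval system is a purely hypothetical figure, and the model ignores latency, answer quality, and prompt caching.

```python
import math


def query_cost(input_tokens: int, output_tokens: int,
               in_rate: float = 3.0, out_rate: float = 15.0) -> float:
    """USD cost of one query at per-million-token rates."""
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


def break_even_queries(full_context_cost: float, retrieval_cost: float,
                       retrieval_fixed_cost: float) -> float:
    """Queries needed before the per-query savings of retrieval cover its fixed cost."""
    saving = full_context_cost - retrieval_cost
    if saving <= 0:
        return math.inf  # retrieval never pays for itself
    return math.ceil(retrieval_fixed_cost / saving)


if __name__ == "__main__":
    full = query_cost(150_000, 2_000)  # whole document in every prompt
    rag = query_cost(8_000, 2_000)     # only the retrieved passages
    print(f"full context ${full:.3f} vs retrieval ${rag:.3f} per query")
    print(break_even_queries(full, rag, retrieval_fixed_cost=2_000))  # 4695
```

Under these assumptions, sending the full document is cheaper below that query volume. Above it, the retrieval pipeline wins on cost alone.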

For developers: Architecture and performance: Using a model with, say, a 100K+ token context can simplify your application architecture. You don’t need external knowledge stores or chunking logic; you just feed the raw data or document into the prompt. This is great for prototyping or when dealing with varied, unstructured data. However, be mindful of increased processing time and memory. More tokens mean more work for the model: slower responses, higher cost per call, and a greater chance of hitting rate limits. You might need to design your system to handle that delay (e.g., show a “processing…” spinner to users) and possibly throttle how often such large requests are made. From a development standpoint, also remember that not all client libraries or environments handle extremely large text blobs gracefully, so you might need to stream data or use compression techniques. Another consideration is model performance: extremely long prompts can sometimes dilute the model’s attention. Vendors of newer models (e.g., GPT-4.1 or Claude 3) report near-perfect recall on long-context retrieval tests, but you should still test how the quality holds up as you pack more information in. If the task is better served by a two-step approach (first find relevant info, then query it), that might outperform a single giant prompt in both accuracy and cost. In short, as a developer, you should use the simplest context that solves the problem and avoid defaulting to million-token contexts for everything. But when you do need it (like processing a long user-uploaded file or maintaining state over a lengthy session), it’s a tremendous capability to leverage. Just implement it with careful logging, cost monitoring, and user experience adjustments for the inevitably slower responses.
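In the two-step approach mentioned above, the first step (finding the relevant information) can start very simply. In this Python sketch, naive keyword overlap stands in for a real retriever such as BM25 or embedding search. The `estimate_tokens` helper uses the rough 4-characters-per-token heuristic and is not a real tokenizer. The second step, sending the selected chunks to the model along with the question, is not shown.

```python
import re


def estimate_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token); not a real tokenizer."""
    return max(1, len(text) // 4)


def chunk_text(text: str, words_per_chunk: int = 200) -> list[str]:
    """Split a document into fixed-size word chunks."""
    words = text.split()
    return [" ".join(words[i:i + words_per_chunk]) for i in range(0, len(words), words_per_chunk)]


def keyword_score(chunk: str, query: str) -> int:
    """Count distinct query words that also appear in the chunk (case-insensitive)."""
    query_words = set(re.findall(r"\w+", query.lower()))
    chunk_words = set(re.findall(r"\w+", chunk.lower()))
    return len(query_words & chunk_words)


def select_context(text: str, query: str, token_budget: int,
                   words_per_chunk: int = 200) -> list[str]:
    """Keep the best-matching chunks that fit in `token_budget`, skipping chunks with no overlap."""
    scored = [(keyword_score(c, query), c) for c in chunk_text(text, words_per_chunk)]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep document order
    selected, used = [], 0
    for score, chunk in scored:
        cost = estimate_tokens(chunk)
        if score == 0 or used + cost > token_budget:
            continue
        selected.append(chunk)
        used += cost
    return selected


if __name__ == "__main__":
    doc = "cats purr softly dogs bark loudly cats sleep often"
    print(select_context(doc, "why do cats purr", token_budget=100, words_per_chunk=3))
    # ['cats purr softly', 'cats sleep often']
```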

Conclusion

Ultra-large context models are game-changers for tasks that were previously impossible in a single pass (like asking an AI to analyze an entire book or a massive dataset). They serve as the AI equivalent of a deep memory dive — sometimes referred to as “deep research” mode because the model can ingest a vast amount of research material at once. Use this mode when the breadth of context is mission-critical to get a good answer. Use smaller-context (or retrieval-enhanced) approaches when the tasks are narrower or when you need snappier, cheaper interactions. By understanding token limits and their costs, you can choose the right tool for the job: balancing context depth, speed, and cost to suit each use case.

Sources

The information above is drawn from recent AI model documentation and announcements, including OpenAI’s and Anthropic’s pricing pages and context window specs, as well as Google’s discussion of Gemini’s long-context features. For example, OpenAI’s GPT-4 Turbo introduced a 128K token window in late 2023, and Google’s Gemini 1.5 Pro now supports up to 2M tokens in preview. Anthropic’s Claude models expanded from 100K to 200K tokens and are designed to eventually handle on the order of a million tokens for certain partners. These extended context capabilities come with engineering innovations (and caching mechanisms to manage repeated costs) but also highlight the classic trade-off: more context = more tokens = higher cost and latency. Pricing examples (Anthropic’s $3/$15 per million token rates, OpenAI’s $2/$8 per million) illustrate how providers are now using the million-token unit as a standard for billing and comparison. In essence, tokens have become a currency of AI work, and like any currency, you’ll want to spend them wisely.

Want more premium content like this? This comprehensive token analysis is exclusive to paying First AI Movers Pro subscribers.

Upgrade today to access weekly deep dives, technical guides, and strategic AI insights that keep you ahead of the curve. Join 1,000+ professionals making smarter AI decisions with our premium content.
